OpenAI's T+1 expansions

Every product idea you’ll ever see comes from a T+1 expansion: given the world today, including its problems and the solutions that already exist, what could a better solution look like? We humans always combine existing things. Nobody can just "skip" a step. However, when you iterate quickly, you can make impressive progress in a short amount of time, which from the outside is often hard to follow and looks like a big jump.

The same is true of OpenAI's progress: it’s a chain of T+1 expansions, and because OpenAI ships one expansion after another incredibly fast, the territory it covers after just a few years is vast.

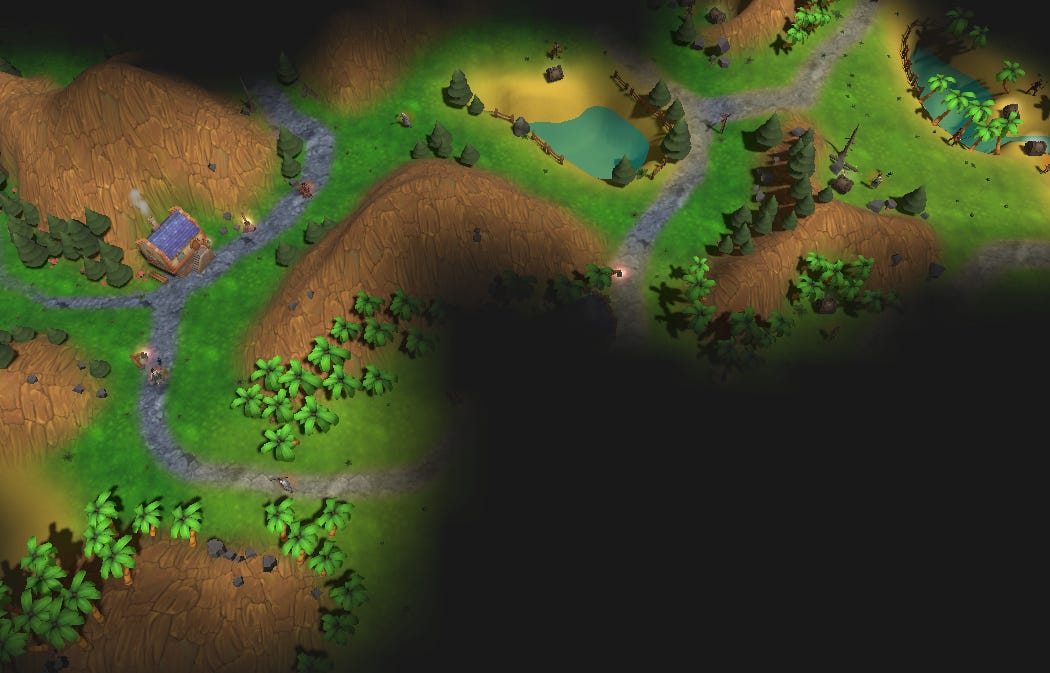

I imagine this like a game of Warcraft or Settlers. There is a fog of war: an area of the map that is dark, that you can't see, and that you therefore haven't conquered yet.

Now, let's put ourselves in the shoes of a gen AI founder who looked at OpenAI's offerings this year, after GPT-4 came out, and tried to find an opportunity; in other words, tried to find dark spots on the map that OpenAI doesn’t control yet. Here are some of the obvious places that were still "dark spots" on OpenAI's map, and how OpenAI expanded into them today:

Customized models for enterprises that can run on-prem: Emad Mostaque, CEO of Stability AI, observed this gap and wanted to collaborate with companies all around the world, counter-positioning against OpenAI with decentralized models. This is now covered by the custom models you can train with OpenAI for $2-3M. Yes, that's a lot of money, but given the value these models will provide, OpenAI understands that this price is probably even cheap.

Saving costs: Finding a way to get the same quality of service for less money. OpenPipe is one example: it trains smaller models on GPT-4 responses to save costs. Some use cases here are now less urgent, as GPT-4 Turbo is 3x cheaper than GPT-4 for input tokens (and 2x cheaper for output tokens).

Chat with your data: OpenAI just released a whole SDK that makes it easy to chat with your data. You can now upload almost any file (most MIME types are supported), and OpenAI will turn it into a useful chatbot for you. You can also connect your own internal functions and call them from the same assistant. While this is far from an autonomous agent, it covers the most popular use case of LangChain and LlamaIndex: talking to your documents.

Well-structured output: Getting reliable JSON output from OpenAI was hard until recently, and you needed to use threatening language like "I'll take a life". This is no longer necessary with the new JSON mode, and we can now use GPT-4 Turbo reliably as an API.

Long context: I used Claude 2 quite a bit to chat with larger PDFs such as books. This workaround is no longer needed, thanks to Assistants and, more generally, GPT-4 Turbo's 128k context window.
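To make the structured-output point above concrete, here is a minimal sketch of what a JSON-mode request could look like. It only builds the request arguments and parses a reply, so it runs without an API key. The helper names `build_json_mode_request` and `parse_reply`, the `answer`/`confidence` keys, and the choice of the `gpt-4-1106-preview` model name are my own assumptions for illustration, not something prescribed by OpenAI.

```python
import json


def build_json_mode_request(question: str) -> dict:
    """Build keyword arguments for a Chat Completions call with JSON mode on.

    Hypothetical helper: the model name and the requested keys are assumptions.
    """
    return {
        "model": "gpt-4-1106-preview",  # assumed GPT-4 Turbo preview model name
        # JSON mode: asks the model to emit a single valid JSON object.
        "response_format": {"type": "json_object"},
        "messages": [
            {
                "role": "system",
                # JSON mode expects the word "JSON" to appear in the messages.
                "content": "Reply with a JSON object with the keys "
                           "'answer' and 'confidence'.",
            },
            {"role": "user", "content": question},
        ],
    }


def parse_reply(content: str) -> dict:
    """Parse the model's reply and make sure it is a JSON object."""
    data = json.loads(content)  # raises ValueError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    return data
```

With the official `openai` Python package (v1 or later), the request could then be sent as `client.chat.completions.create(**build_json_mode_request("..."))`, and the returned `choices[0].message.content` passed to `parse_reply`. Even with JSON mode it seems worth keeping the parsing step, since the mode is about valid JSON syntax and not about matching a particular schema.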

Given that OpenAI has expanded further in all these directions today, what will come next? What is the next T+1 step from here? It's clear that if a startup built today sits within T+1 reach of OpenAI's next iteration cycle, there's a significant risk involved.

So, what are some ways to protect against that? How can you build a startup today that's not prone to being "eaten" or "killed", as people like to say on Twitter/X, by the next round of OpenAI announcements?

I'll write about that in the coming days. For now, let's just acknowledge the speed at which OpenAI is shipping. This is truly inspiring.